How to Fine-Tune Llama 2: in this part, we will learn about all the steps required to fine-tune the 7-billion-parameter Llama 2 model. Example scripts are available for fine-tuning and running inference with the Llama 2 model, along with guidance on using them safely.

The following tutorial takes you through the steps required to fine-tune Llama 2 on an example dataset using the supervised fine-tuning (SFT) approach.

In this guide, we'll show you how to fine-tune a simple Llama 2 classifier that predicts whether a text's sentiment is positive, neutral, or negative.

In this notebook and tutorial, we will fine-tune Meta's Llama 2 7B. Watch the accompanying video walk-through (but for Mistral) here. If you'd like to see that…
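The SFT approach trains the model on prompt/response pairs rendered as plain text. As a minimal sketch, assuming the Llama 2 chat prompt layout (`[INST]`, `<<SYS>>`) and the three-way sentiment task above, each labeled example can be turned into one training string, and the model's generated answer can be mapped back to a label. The helper names `format_sft_example` and `parse_prediction`, the system prompt, and the instruction wording are all hypothetical, not taken from the tutorials quoted here.

```python
from typing import Optional

# Hypothetical label set for the three-way sentiment task described above.
LABELS = ("positive", "neutral", "negative")


def format_sft_example(
    text: str,
    label: str,
    system_prompt: str = "You are a sentiment classifier.",
) -> str:
    """Render one labeled example as a Llama 2 chat-style SFT training string."""
    if label not in LABELS:
        raise ValueError(f"unknown label: {label!r}")
    user_msg = (
        "Classify the sentiment of this text as positive, neutral, or negative.\n"
        f"Text: {text}"
    )
    # Llama 2 chat layout: system prompt inside <<SYS>>, user turn inside [INST],
    # and the target answer after [/INST], closed by the </s> end-of-sequence token.
    return (
        f"<s>[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n"
        f"{user_msg} [/INST] {label} </s>"
    )


def parse_prediction(generated: str) -> Optional[str]:
    """Map generated text back to a label; return None if no label prefix matches."""
    answer = generated.strip().lower()
    for label in LABELS:
        if answer.startswith(label):
            return label
    return None


if __name__ == "__main__":
    print(format_sft_example("The battery lasts all day.", "positive"))
    print(parse_prediction(" Negative"))
```

Llama tokenizers may add the `<s>` beginning-of-sequence token on their own; if yours does, drop the literal `<s>` from the template so it is not doubled.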

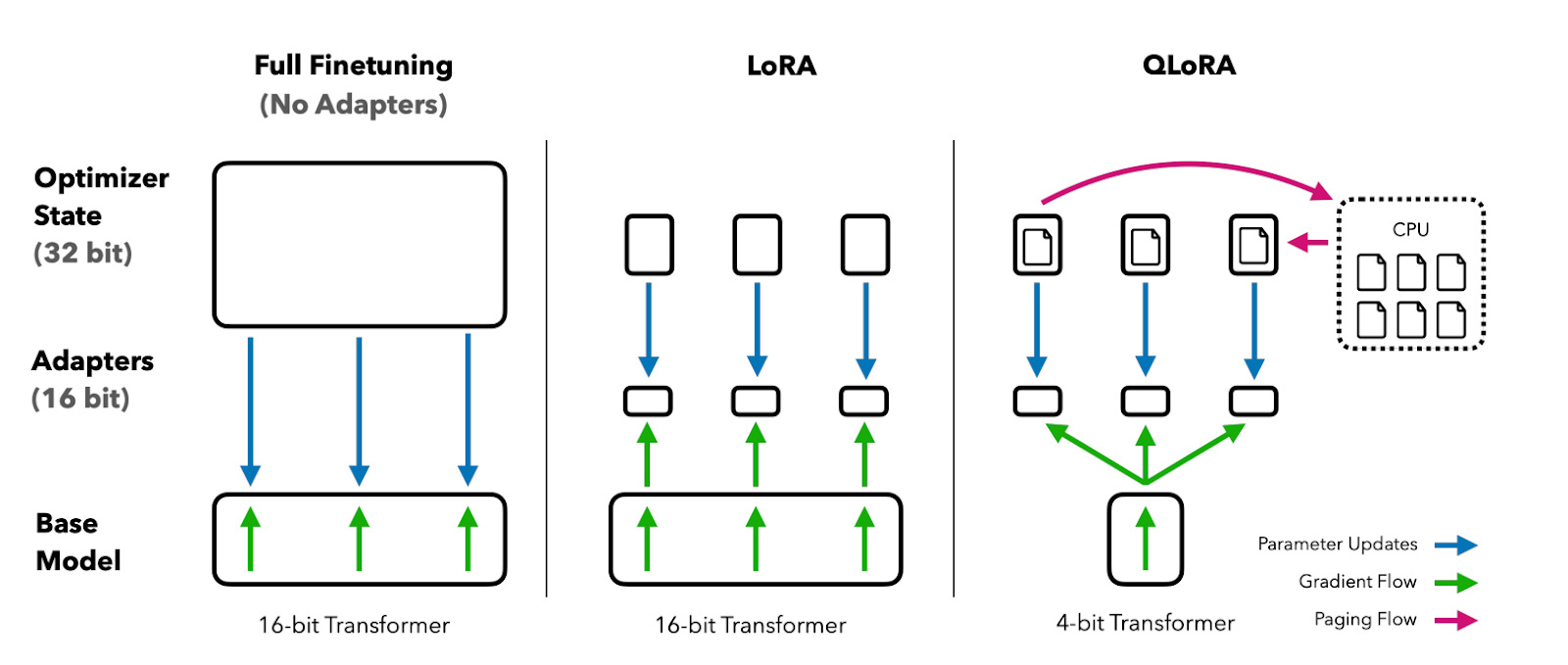

This repo contains GGUF format model files for Together's Llama2 7B 32K.

Llama 2 encompasses a range of generative text models, both pretrained and fine-tuned, with…

NF4 is a static method used by QLoRA to load a model in 4-bit precision to…

The main goal of llama.cpp is to enable LLM inference with minimal setup.
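To make the NF4 idea concrete: NF4 ("4-bit NormalFloat") is a fixed 16-entry codebook whose levels follow quantiles of a standard normal distribution, and each block of weights is scaled by its absolute maximum before every weight is snapped to the nearest level. The sketch below builds such a codebook with `statistics.NormalDist`, assuming the asymmetric layout described for QLoRA (8 positive levels, 7 negative levels, and an exact zero); the `offset` value and the function names are illustrative assumptions, and the real bitsandbytes kernels also pack codes into bytes, which this sketch skips.

```python
from statistics import NormalDist


def nf4_codebook(offset: float = 0.9677083) -> list[float]:
    """Return 16 NF4-style levels in [-1, 1] built from standard-normal quantiles."""
    nd = NormalDist()

    def linspace(start: float, stop: float, n: int) -> list[float]:
        return [start + (stop - start) * i / (n - 1) for i in range(n)]

    # Quantiles above 0.5 give positive levels; the 0.5 endpoint (which maps to 0)
    # is dropped here and an exact 0.0 is added separately.
    positive = [nd.inv_cdf(p) for p in linspace(offset, 0.5, 9)[:-1]]  # 8 levels
    negative = [-nd.inv_cdf(p) for p in linspace(offset, 0.5, 8)[:-1]]  # 7 levels
    levels = sorted(positive + [0.0] + negative)
    scale = max(abs(v) for v in levels)  # normalize so the extremes are -1 and 1
    return [v / scale for v in levels]


def quantize_block(weights: list[float], codebook: list[float]) -> tuple[list[int], float]:
    """Absmax-scale one block of weights and map each to the nearest 4-bit code."""
    absmax = max((abs(w) for w in weights), default=0.0) or 1.0  # avoid divide-by-zero
    codes = [
        min(range(len(codebook)), key=lambda i: abs(codebook[i] - w / absmax))
        for w in weights
    ]
    return codes, absmax


def dequantize_block(codes: list[int], absmax: float, codebook: list[float]) -> list[float]:
    """Recover approximate weights from 4-bit codes and the block's scale."""
    return [codebook[c] * absmax for c in codes]


if __name__ == "__main__":
    cb = nf4_codebook()
    block = [0.12, -0.5, 0.03, 0.0, 0.31, -0.07]
    codes, absmax = quantize_block(block, cb)
    print(codes, absmax)
    print(dequantize_block(codes, absmax, cb))
```

Only the 4-bit codes and one scale per block need to be stored, which is where the memory saving comes from. In the Hugging Face transformers library, this format is requested with `BitsAndBytesConfig(load_in_4bit=True, bnb_4bit_quant_type="nf4")` rather than by hand.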

Original model: elyza/ELYZA-japanese-Llama-2-7b-instruct, which is based on Meta's Llama 2 and has undergone…

Install WasmEdge via the following command line.

Original model: elyza/ELYZA-japanese-Llama-2-7b-fast-instruct, which is based on Meta's Llama 2 and has…

Hello, this is Sasaki, Nakamura, Hirakawa, and Horie from ELYZA's research and development team. On this occasion, ELYZA has … Meta's Llama 2…

ELYZA-japanese-Llama-2-7b is a model that ELYZA Inc. (hereafter "the Company") based on Llama 2 in order to extend its Japanese-language capabilities…

Medium, balanced quality; prefer using Q4_K_M.

Description: This repo contains GGUF format model files for Meta's Llama 2 7B.

About GGUF: GGUF is a new format introduced by the llama.cpp team on August 21st, 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Initial GGUF model commit (models made with llama.cpp commit bd33e5a).

Let's get our hands dirty and download the Llama 2 7B Chat GGUF model. After opening the page, download the llama-2-7b-chat.Q2_K.gguf file, which is the most…
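A quick way to check that a download really is a GGUF file is to read its fixed-size header: the 4-byte magic `GGUF`, a little-endian uint32 version, then (from version 2 on) uint64 counts of tensors and metadata key/value pairs. The sketch below reads only that header and assumes a little-endian, version 2+ file; it does not parse the metadata or tensor descriptions that follow. `GGUFHeader` and `read_gguf_header` are hypothetical names, not part of llama.cpp.

```python
import io
import struct
from typing import BinaryIO, NamedTuple


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(f: BinaryIO) -> GGUFHeader:
    """Read the fixed GGUF header (assumes a little-endian, version 2+ file)."""
    magic = f.read(4)
    if magic != b"GGUF":
        raise ValueError(f"not a GGUF file (magic={magic!r})")
    (version,) = struct.unpack("<I", f.read(4))
    if version < 2:
        # Version 1 stored the two counts as uint32; not handled in this sketch.
        raise ValueError(f"unsupported GGUF version {version}")
    tensor_count, metadata_kv_count = struct.unpack("<QQ", f.read(16))
    return GGUFHeader(version, tensor_count, metadata_kv_count)


if __name__ == "__main__":
    # Synthetic header bytes so the example runs without downloading a model.
    fake = io.BytesIO(b"GGUF" + struct.pack("<IQQ", 3, 291, 24))
    print(read_gguf_header(fake))
```

Against a real download, open the file in binary mode, for example `with open("llama-2-7b-chat.Q2_K.gguf", "rb") as f: print(read_gguf_header(f))`; that path assumes the file sits in the current working directory.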
