Run Llama 2 70B on Your GPU with ExLlamaV2

LLaMA-65B and 70B perform optimally when paired with a GPU that has a minimum of 40 GB of VRAM. One user report reads: "Mem required: 22944.36 MB (+ 1280.00 MB per state). I was using q2, the smallest version. That RAM is going to be tight with 32 GB." Another reports 3.81 tokens per second with llama-2-13b-chat.ggmlv3.q8_0.bin, CPU only. Opt for a machine with a high-end GPU such as NVIDIA's RTX 3090 or RTX 4090, or a dual-GPU setup, to accommodate the … We target 24 GB of VRAM. If you use Google Colab, you cannot run it.
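To see why 24 GB of VRAM is a tight target for a 70B model, a rough back-of-the-envelope estimate helps. The sketch below counts only the memory for the weights (parameters × bits per weight ÷ 8). The function names, the 2.5 bits-per-weight figure, and the 3 GB overhead value are illustrative assumptions, not numbers from the text above; real usage also depends on the context cache and runtime buffers.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def fits_in_vram(n_params: float, bits_per_weight: float,
                 vram_gb: float, overhead_gb: float = 0.0) -> bool:
    """Check whether the weights plus an assumed fixed overhead fit in vram_gb.

    overhead_gb is a hypothetical allowance for the cache and buffers;
    it is a placeholder, not a measured value.
    """
    return weight_memory_gb(n_params, bits_per_weight) + overhead_gb <= vram_gb


if __name__ == "__main__":
    n_params = 70e9  # Llama 2 70B
    for bpw in (2.5, 4.0, 16.0):  # assumed example precisions
        print(f"{bpw:>4} bits/weight: {weight_memory_gb(n_params, bpw):.1f} GB")
    print("Fits in 24 GB at 2.5 bpw:", fits_in_vram(n_params, 2.5, 24.0))
```

Under these assumptions, the weights alone at 2.5 bits per weight take about 21.9 GB, which leaves little headroom on a 24 GB card; at 4 bits per weight they take 35 GB and no longer fit on a single 24 GB GPU.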

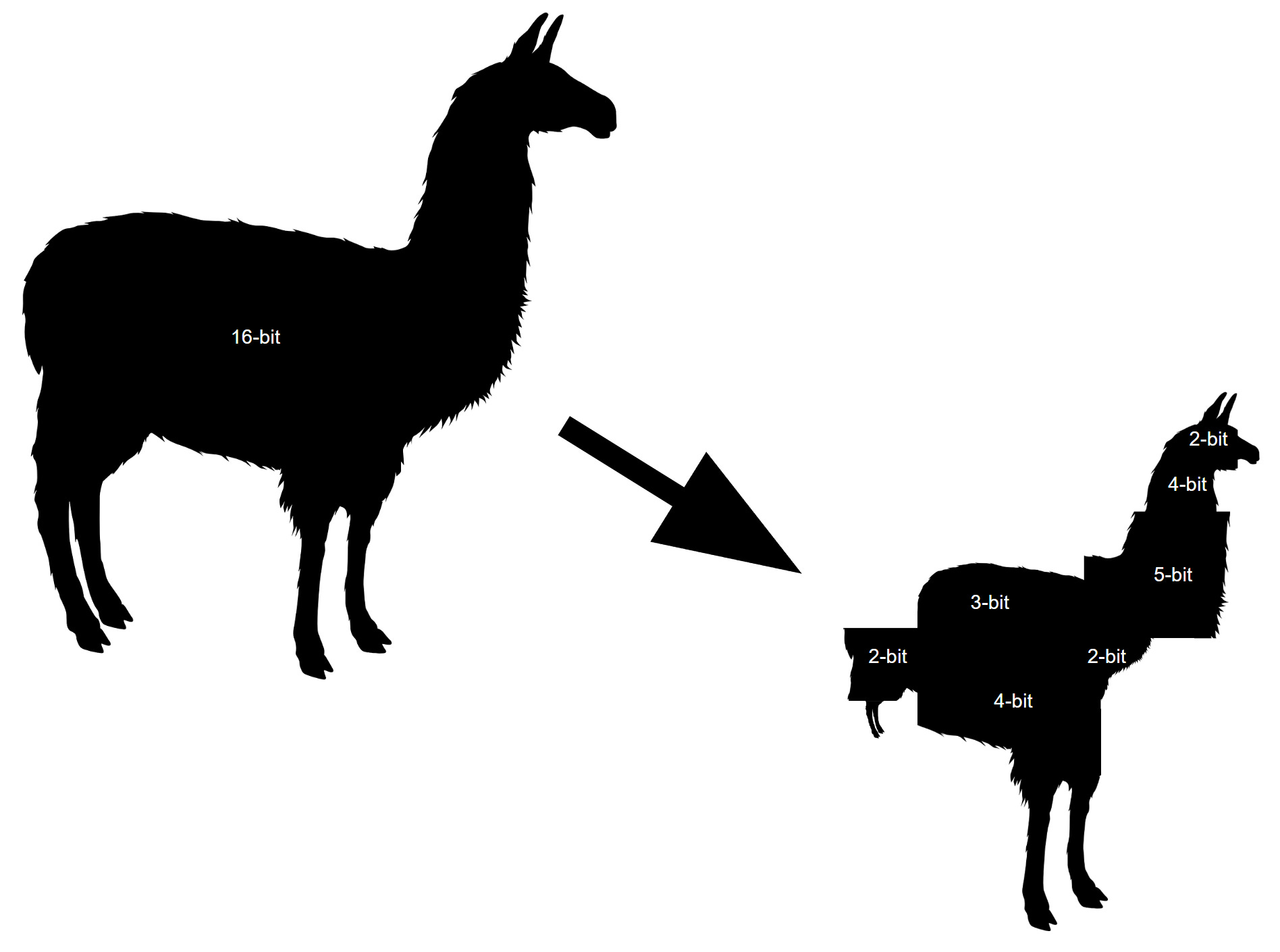

Llama 2 7B - GGML. Description: this repo contains GGML format … Llama 2 is here: get it on Hugging Face, along with a blog post about Llama 2 and how to use it with Transformers and PEFT. We used it to quantize our own Llama model in different formats (Q4_K_M and Q5_K_M).

Customize Llama's personality by clicking the settings button. I can explain concepts, write poems, and code. Experience the power of Llama 2, the second-generation large language model by Meta. Llama 2 is a product of cutting-edge AI technology. It's a large language model that uses machine learning to generate … Llama 2 is being released with a very permissive community license and is available for …

Llama 2 is a family of pre-trained and fine-tuned large language models (LLMs) released by Meta AI in … In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models.
